Azure Sql Database Vcore Vs Dtu

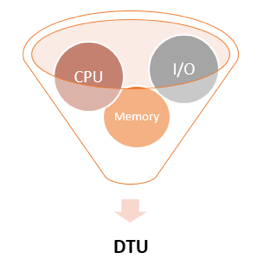

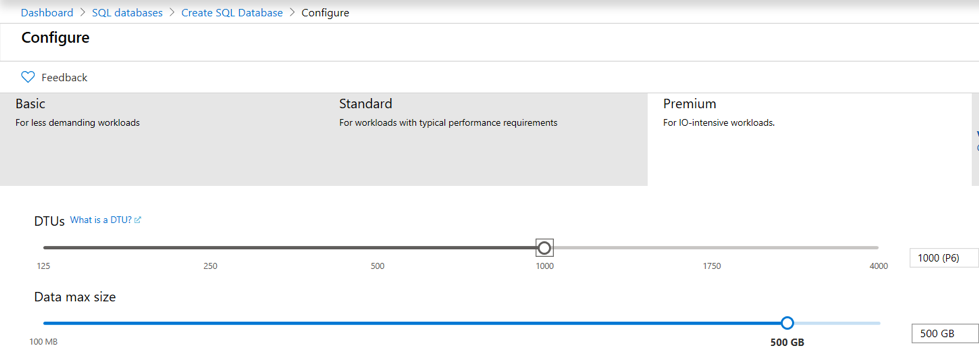

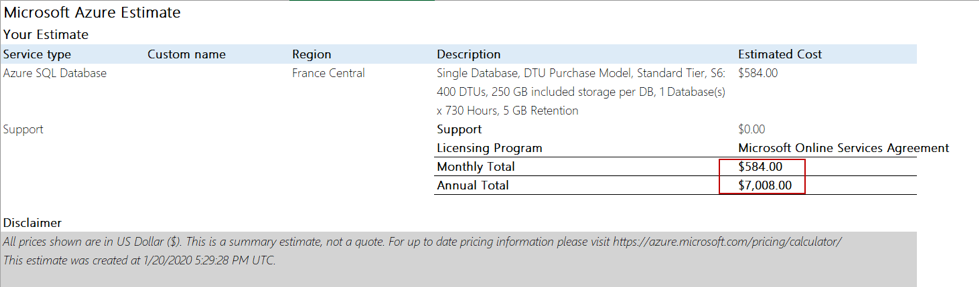

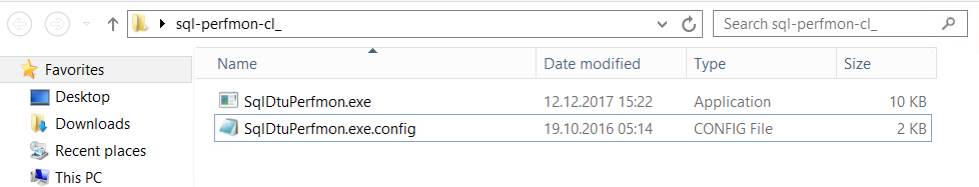

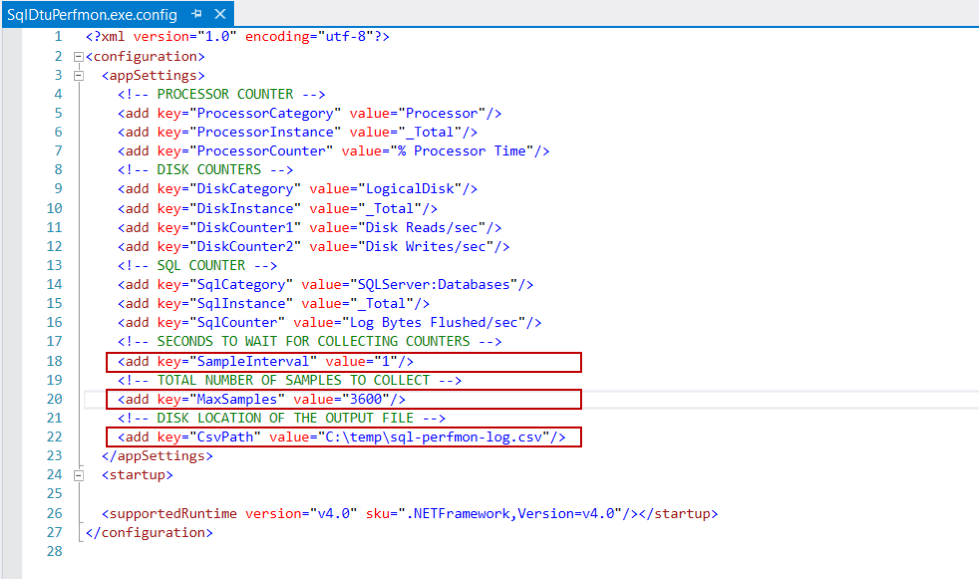

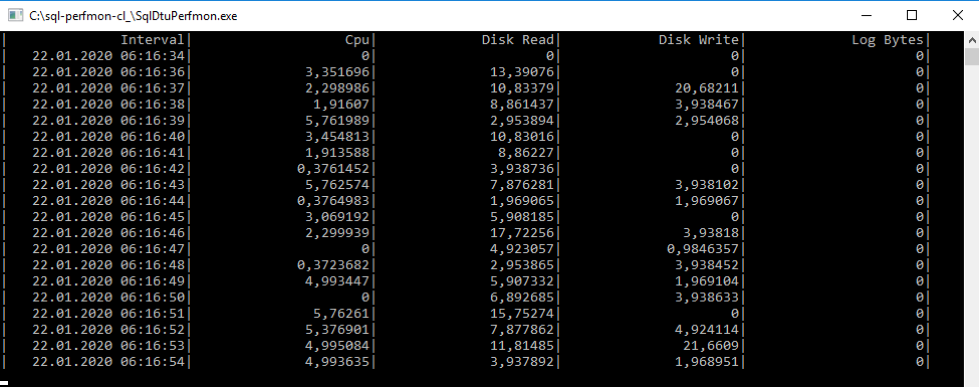

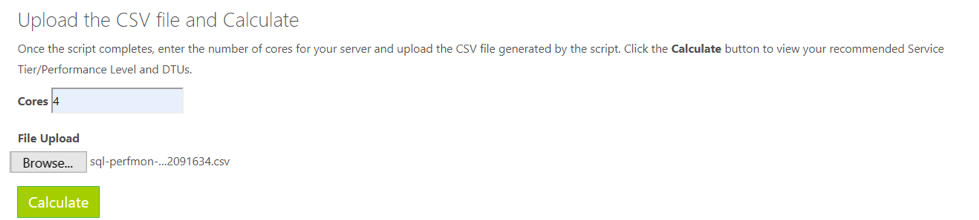

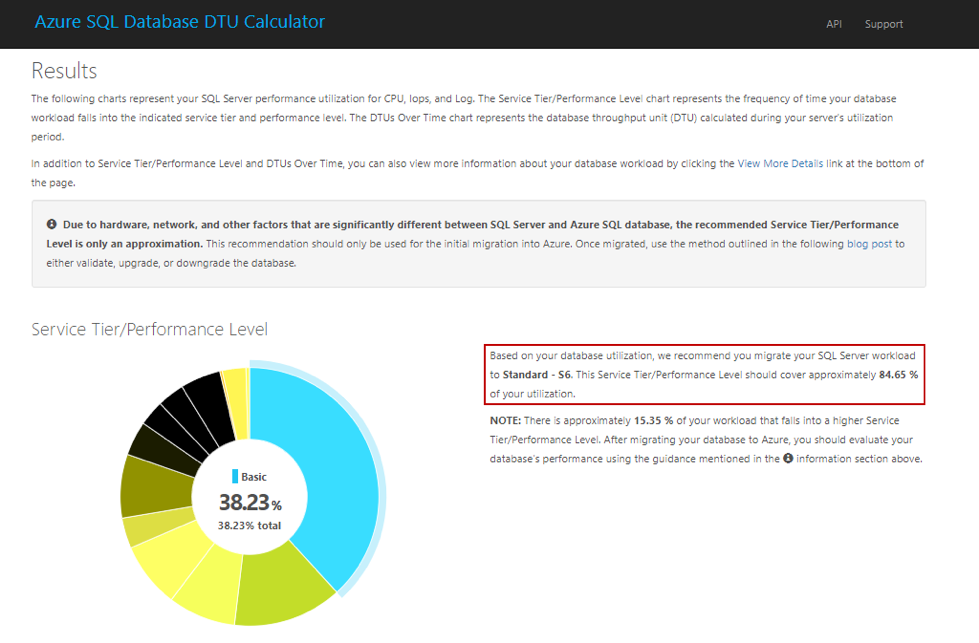

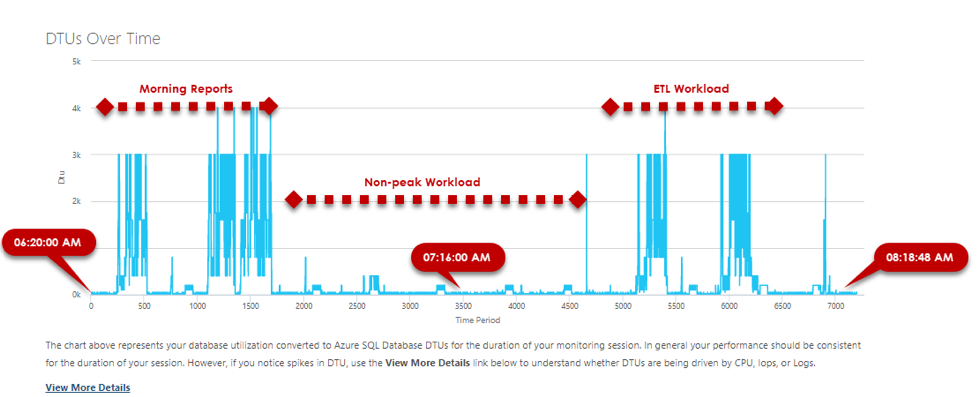

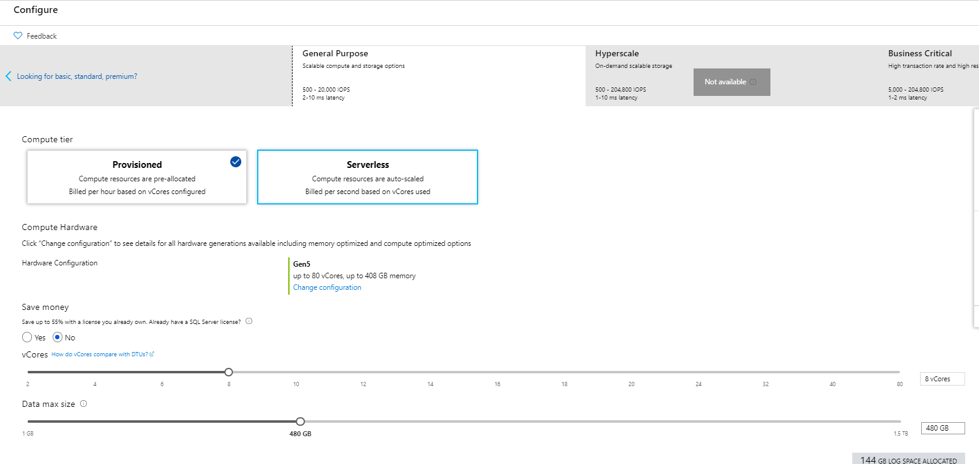

Microsoft Azure provides Platform as a Service (PaaS) Database Engine through the Azure SQL Database platform, so that we can use this database for the cloud-based applications. The main advantage of the Azure SQL Database is enabling the easy scaling with zero downtime and does not require any version upgrading or patching process. Also, we don't have to worry about hardware issues. However, the significant consideration of the Azure SQL Database is to meet the performance requirement of the deployed database against the minimum cost. Undoubtedly, nobody wants to pay money for the redundant resources or features that they do not use or plan to use. At this point, Microsoft Azure offers two different purchasing models to provide cost-efficiency: A purchasing model decision directly affects the database performance and the total amount of the bills. In my thought, If the deployed database will not consume too many resources the DTU-Based purchase model will be more suitable. Now, we will discuss the details about these two purchasing models in the following sections. In order to understand the DTU-Based purchase model more clearly, we need to clarify what does make a sense DTU in Azure SQL Database. DTU is an abbreviation for the "Database Transaction Unit" and it describes a performance unit metric for the Azure SQL Database. We can just like the DTU to the horsepower in a car because it directly affects the performance of the database. DTU represents a mixture of the following performance metrics as a single performance unit for Azure SQL Database: The main idea of the DTU concept is to offer a preconfigured resource configuration to clients so that it simplifies the scaling of the performance over a single metric. Such as, if we need more performance, we can slide the bar and increase the number of DTU in Azure SQL Database. DTU-Based purchasing model contains three different service tiers and these service tiers offer different DTUs and feature options. The following table illustrates the service tiers which have taken part in the DTU-based purchase model. Basic Standard Premium Target workload Development and production Development and production Development and production Uptime SLA 99.99% 99.99% 99.99% Maximum backup retention 7 days 35 days 35 days CPU Low Low, Medium, High Medium, High IO throughput (approximate) 1-5 IOPS per DTU 1-5 IOPS per DTU 25 IOPS per DTU IO latency (approximate) 5 ms (read), 10 ms (write) 5 ms (read), 10 ms (write) 2 ms (read/write) Columnstore indexing N/A S3 and above Supported In-memory OLTP N/A N/A Supported Maximum DTU 5 3000 (S12) 4000 (P15) Maximum Storage Size 2 GB 250 GB 1 TB As we can see, the maximum DTUs and features vary according to their service tier. Also, the pricing model will be changed relatedly to the service tier. For example, the following configuration for a single database in the DTU-Based Purchase Model will be $584.00 per month. Briefly, Elastic Pool helps us to automatically manage and scale the multiple databases that have unpredictable and varying resource demands upon a shared resource pool. Through the Elastic Pool, we don't need to scale the databases continuously against resource demand fluctuation. The databases which take part in the pool consumes the Elastic Pool resources when they are needed but they can not exceed the Elastic Pool resource limitations so that it provides a cost-effective solution. After deciding to use DTU-based purchase model, we have to find out the following question-answer with logical reasons: DTU Calculator will be the main solution to estimate the DTUs requirement when we are migrating on-premise databases to Azure SQL Database. The main idea of this tool is capturing the various metrics utilization from the existing SQL Server that affects the DTUs and then it tries to estimate approximately DTUs and service tier in the light of the collected performance utilizations. DTU calculator collects the following metrics through the either Command-Line Utility or PowerShell Script and saves these metrics to a CSV file. In this article, we will learn the usage of the Command-Line Utility because this an open-source project and codes are hosted on the GitHub. Thus, we can make changes easily if we need. After downloading and unzipping the Command-Line Utility, two files will come in front of us. SqlDtuPerfmon.exe.config helps us to determine some parameters of the Command-Line Utility: CsvPath specifies the CSV file path where the collected metrics will be stored. SampleInterval specifies how many seconds intervals the samples will be collected MaxSamples specifies the maximum number of samples that will be collected. At this point, we have to take into account some considerations about the DTU Calculator. DTU Calculator collects the total utilization of the metrics on the computer. For this reason, the other processes which affect the CPU, memory and disk consumption must be stopped otherwise it will be difficult to make an accurate DTUs estimation. Another issue is, as possible, we need to gather utilization of the metrics that cover peak workload time intervals. In this way, the DTU Calculator offers the best recommendations and we find out the maximum DTUs requirement with a more approximate estimation. Now, we will run the SqlDtuPerfmon.exe and it will directly start to collect resource utilization and save the specified CSV file. After the completion of collecting the resource utilization, we will enter the number of the cores and upload the CSV file to the DTU Calculator web site. When we click the Calculate button, firstly, Service Tier/Performance Level pie chart appears on the screen and it shows the divided estimated service tier suggestions into slices with the percentage details. According to DTU Calculator, Standard - S6 tier will provide a satisfying performance for this workload. Just below this chart, DTUs Over Time chart is shown and this chart represents the DTUs changing against the time period. Before evaluating this chart, we can add some additional pieces of information in order to interpret it more easily. As you can see, the line chart represents an unstable workload but it made more sense when we added information notes. In my thought, this chart is very useful to understand the interaction between workload changings and DTUs. Thus, we can make a more proper estimation of the required DTUs. As we mentioned at the entrance of the article, our main goal should be to find out a cost-effective solution for the workload. However, these suggestions do not express the precise requirements of the DTU in Azure SQL. For this reason, we may need to change the Service Tier or Purchase Model after the deployment of the database to Azure SQL. When we click the View More Details, some additional reports will be shown and these reports represent the individual recommendations for CPU, IOPS, and Log resource utilizations. They will be very helpful to understand particularly these utilizations. This concept is similar to the traditional approach because we are able to decide each resource of the database. We can arrange the VCores and max data size options manually in this model. However, we can not determine the memory resource. Each VCore comes with dedicated memory and the dedicated value of the memory depends on the generation of the VCores. As a last, in this model we can choose the following service tiers: In this article, we explored the purchasing models of the Azure SQL Database and we uncover the usage instructions of the DTU Calculator in order to estimate required DTU in Azure SQL for on-premise databases.

Database Transaction Unit (DTU)-Based purchasing model

Elastic Pool

Properly Estimation of the DTU for Azure SQL Database

Virtual core (vCore)-Based Purchasing Model

Conclusion

Azure Sql Database Vcore Vs Dtu

Source: https://www.spotlightcloud.io/blog/what-is-dtu-in-azure-sql-database-and-how-much-do-we-need